Abstract:

This article critically examines the current state of evidence-based practice in psychotherapy, particularly in relation to trauma treatment. It challenges the dominance of Cognitive Behavioral Therapy (CBT) and medication-based approaches in research and practice, arguing that these methods often fail to address the root causes of trauma. The author contends that newer, less researched modalities like somatic and brain-based therapies may be more effective in treating trauma, despite their lack of representation in academic literature. The piece explores various factors contributing to this discrepancy, including research funding, methodological limitations, and the influence of pharmaceutical companies on study design and publication.

Key Ideas:

1. The McNamara fallacy in psychological research: overreliance on quantitative data while ignoring qualitative aspects of therapy.

2. Disconnect between evidence-based practices promoted in academic research and effective techniques used by experienced clinicians.

3. Limitations of CBT and medication in treating the root causes of trauma, as opposed to merely managing symptoms.

4. Financial and logistical challenges in researching more complex, intuitive therapy modalities.

5. Influence of funding sources, particularly pharmaceutical companies, on research agendas and outcomes.

6. Limitations of objective, quantitative measures in capturing the full scope of psychological healing and change.

7. Exclusion of complex cases and early dropouts from studies, potentially inflating the perceived efficacy of certain treatments.

8. The shift in academic publishing towards citation-driven research rather than clinically applicable findings.

9. The value of clinician intuition and flexibility in therapy, which is difficult to capture in standardized research protocols.

10. Challenges in measuring and researching subcortical brain activation and subjective patient experiences.

11. The need for a broader definition of “evidence” in psychotherapy research, including clinician and patient validation.

12. Criticism of the current research paradigm’s focus on symptom management rather than cure or prevention.

13. The potential bias in randomized controlled trials, particularly those funded by pharmaceutical companies.

14. The importance of considering the human element in psychotherapy research and practice.

15. A call for research that focuses on exploring unknown aspects of mental health treatment rather than confirming existing beliefs.

Article:

The McNamara fallacy, named for Robert McNamara, the US Secretary of Defense from 1961 to 1968, involves making a decision based solely on quantitative observations and ignoring all others. The reason given is often that these other observations cannot be proven. The fallacy refers to McNamara’s belief as to what led the United States to defeat in the Vietnam War—specifically, his quantification of success in the war (e.g., in terms of enemy body count), ignoring other variables. -From Wikipedia

I remember going into my first day of research class during my master’s program. We learned about the evidence-based practice system on which the psychology profession is built. Put simply, evidence-based practice is the system by which clinicians make sure that the techniques they use are backed by science. Evidence-based practice means that psychotherapists only use interventions that research has proven effective. Evidence is determined by research studies that test for measurable changes in a population given a certain intervention.

What a brilliant system, I thought. I then became enamored with research journals. I memorized every methodology by which research was conducted. At night I would peruse academic libraries for every topic I encountered in my clinical work. I would only believe a study’s findings had merit if it used the best methodologies. I loved research and the evidence-based practice system. I was so proud to be part of a profession that took science so seriously and used it to improve the quality of care I gave patients.

There was just one problem. The more I learned about psychotherapy, the less helpful I found research. None of the experts I encountered in the profession used the methods I kept reading about in research. In fact, I found psychological journals from the 1970s more helpful than modern publications obsessed with evidence-based practice. Those older papers would come up in digital libraries when I searched for more information about the interventions my patients liked. Moreover, I found that the most popular and effective private practice clinicians were not using the techniques I was reading about in the scientific literature either. What gives?

Psychological trauma and the symptoms and conditions it causes (PTSD, dissociative disorders, panic disorders, etc.) are some of the most difficult problems to treat in psychotherapy. It would therefore make sense for patients with disorders caused by psychological trauma to be one of the most studied populations in research. So what are the two most commonly researched interventions for trauma? Prescribing medication and CBT (cognitive behavioral therapy).

One thing most of the best trauma therapists in the world agree on is that CBT and medication don’t actually process trauma at all; instead, they help patients manage the symptoms that trauma causes. As a trauma therapist, my goal is to help patients actually process and eliminate psychological trauma. Medicating symptoms or teaching patients to manage them might be necessary periodically, but surely it shouldn’t be the GOAL of treatment.

I’m mixing metaphors, but this image might help clarify these treatment modalities for those unfamiliar with them. Imagine that psychological trauma is like an allergy to cats. Once you have an allergic reaction to a cat, a psychiatrist could give you an allergy medication like Benadryl. A CBT therapist would teach you how to change your behavior around your allergy. They might tell you to avoid cats or wash after touching one. A therapist practicing brain-based or somatic trauma treatment would give you an allergy shot to help you develop an immunity to cats. The CBT patient never gets to know a cat’s love.

I don’t have time to explore here why therapies like CBT, which give patients scripted ego-management strategies, took over the profession after the 1980s. If you are interested in why, check out my article “Is the Corporatization of Healthcare and Academia Ruining Psychotherapy?” Suffice it to say that insurance and American healthcare companies pay for much of the research that is conducted, and they like to make money. CBT and prescribing drugs are two of the easiest ways for those institutions to accomplish that goal.

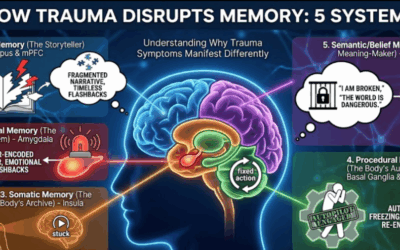

Many of the MOST effective ways to treat trauma use the body and deep emotional brain system to assist patients in processing and permanently releasing psychological trauma.

Unlike CBT, the modalities that accomplish this are not manualizable. They cannot be reduced to an “if they say this, then you say that” script. Instead, somatic therapies often use a therapist’s intuition and make room for the patient to participate in the therapeutic process. CBT, on the other hand, is a formula that a therapist performs “correctly” or “incorrectly” based on their adherence to a manual. Right now, hospitals are rushing to program computers to do CBT so they can reduce overhead. Yikes! Imagine a therapy experience like the self-checkout at Walmart.

If I and most of the leading voices in the profession agree that newer brain-based and body-based therapy modalities are the future of trauma treatment, then why hasn’t research caught up yet? To keep this article from becoming a book, I will break down the failure of modern research to back the techniques that actually work in psychotherapy into a few key points.

1. It’s Expensive – cash rules everything around me, C.R.E.A.M., get the money

Research studies cost tons of money and take tons of time. Researchers have to plan studies and get them cleared with funders, ethics boards, university staff, etc. They then have to screen participants and train and pay staff. The average study costs about $45,000.

I would love to do a study myself on some of the therapy modalities that we use at Taproot Therapy Collective, but unfortunately I have to pay my mortgage. Studies get more expensive when you are studying things that have more moving parts and variables. Things like, uh… therapy modalities that actually work to treat trauma. These modalities are unscripted; they allow a clinician to use their intuition and conventional wisdom, and they make room for a patient to discover their own insights and interventions.

Someone has to pay for those studies and those someones usually aren’t giving you that money without an agenda. Giant institutions are the ones most likely to benefit from researching things like prescribing drugs and CBT. They are also the ones that are the most likely to be in control of who gets to research what.

The sedative drugs prescribed to treat trauma work essentially like alcohol: they dull and numb a person’s ability to feel. Antidepressants reduce hopelessness and obsession. While this might help manage symptoms, it doesn’t help patients process trauma or gain insight into their psychology. Antidepressants and sedatives also block the healthy and normal anxiety that poor choices should cause us to feel. Despite this, drugs are often prescribed to patients who have never been referred to therapy.

For all the “rigorous ethical standards” modern research mandates, those standards say nothing about who pays the bills for the studies. Drug companies conduct the vast majority of research studies in the United States, and those drug companies also like to make money. Funnily enough, most of the research drug companies perform tends to validate the effectiveness of their products.

Does anyone remember all the 1990s tobacco company research that failed to prove cigarettes were dangerous? All those studies still passed ethics board review. Maybe we should distribute research money to the professionals who are actually working clinically with patients instead of career academics who do research for a living. At the very least, keep it out of the hands of people who have a conflict of interest with the results.

This leads me to my next point.

2. We Only Use Research to Prove Things that we Want to Know – Duh!

The thing that got left out of my Research 101 class was that research usually has an agenda. Even if the science is solid, there are some things that the commissioners of a study don’t want to know. For example, did you know that evaluations found the D.A.R.E. program did not reduce drug use, and some even found it was associated with increased drug use? Uh… yeah, that wasn’t what the patrons of that research meant to prove, so you never heard about it. It also didn’t stop the D.A.R.E. program from sticking around for another 10 years and 10 more studies that said the same thing.

Giant institutions don’t like to be told that their programs need to change. They wield an enormous amount of power over what gets researched and they tend to research things that would validate the decisions that they make, even the bad decisions.

If you want research to be an effective guide for clinicians choosing evidence-based interventions, then you have to research all modalities of psychotherapy in equal measure. When the vast majority of research is funneled into the same areas, those areas of medicine become better known clinically regardless of their validity. When very few models of therapy are researched, those few models appear, falsely, to be superior.

Easier and cheaper research studies are going to be designed and completed much more often than research studies that are more complicated. Even when institutional or monetary control of research is not an issue, the very nature of research design means that it is trickier to research things like “patient insight” than it is to research “hours of sleep”. This leads me to my next point.

3. Objective is not Better – People are not Robots

CBT was designed by Aaron Beck to be a faster, data-driven alternative to the subjective and lengthy process of Freudian psychoanalysis. Beck did this by having patients agree on a goal that was measurable with a number, like “hours of sleep” or “times I drank,” and then complete assessments to see whether the goal was being accomplished. Because of this, CBT is inherently objective and research-based, which makes it extremely easy to research.

This approach works when it works, but a person’s humanity is not always reducible to a number. I once heard a story from a colleague who was seeing a patient who had just completed CBT with another clinician to “reduce” marijuana use. The patient, who appeared to be very high, explained that his CBT clinician had discharged him after he cut back from 6 to only one joint per day. The patient explained proudly that he had simply begun to roll joints that were 6 times the size of the originals.

That story is humorous, but it shows you the irony of a number based system invading a very human type of medicine. Squeezing people and behavior into tiny boxes means that you miss the whole person.

Patients with complex symptom presentations of PTSD and trauma are often excluded from research studies because they do not fit the criteria of having one measurable symptom. Discarding the most severe and treatment-resistant cases leaves researchers with only the easiest cases of PTSD to treat. This, in turn, falsely inflates the perceived efficacy of the model being researched.

Additionally, these studies usually exclude people who “drop out” of therapy early. In my experience, people who leave therapy have failed to be engaged by the therapist and their model of choice. Leaving them out of the results falsely inflates the efficacy of models by discounting patients who stop coming to a treatment they feel is not helping them. It is my belief that it is the therapist’s job to engage a patient in treatment, not the patient’s job to engage themselves. Trauma patients often know quickly whether a treatment is going to help them, or whether the information a therapist has is something they’ve already heard.
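To make the dropout arithmetic concrete, here is a minimal Python sketch with entirely hypothetical numbers. It compares a response rate calculated only over patients who completed treatment with one that counts every dropout as a non-responder. Counting dropouts as failures is just one conservative convention among several that trials use, and the names `TrialArm`, `completer_rate`, and `all_enrolled_rate` are illustrative, not taken from any study or library.

```python
from dataclasses import dataclass


@dataclass
class TrialArm:
    """Hypothetical counts for one arm of a treatment study."""
    enrolled: int     # everyone who started treatment
    dropped_out: int  # left before the study ended
    responders: int   # completers who met the improvement threshold


def completer_rate(arm: TrialArm) -> float:
    """Response rate among only the patients who finished treatment."""
    completers = arm.enrolled - arm.dropped_out
    return arm.responders / completers


def all_enrolled_rate(arm: TrialArm) -> float:
    """Response rate that counts every dropout as a non-responder."""
    return arm.responders / arm.enrolled


# Invented numbers: 100 patients start, 30 drop out, 42 completers improve.
arm = TrialArm(enrolled=100, dropped_out=30, responders=42)
print(f"completers only: {completer_rate(arm):.0%}")
print(f"all enrolled:    {all_enrolled_rate(arm):.0%}")
```

With these made-up counts, the same 42 improved patients look like a 60% success rate when the 30 dropouts are left out and a 42% success rate when they are counted.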

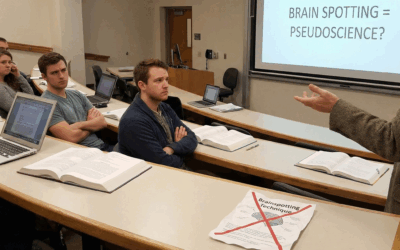

Trauma affects the subcortical regions of the brain, the same regions that newer brain-based medicine targets. CBT is a cognitive intervention that measures and seeks to modify cognition. Clinical research avoids measuring subcortical activation and patients’ subjective feelings in favor of measuring cognition and behavior. Newer models of therapy like Brainspotting and Sensorimotor Psychotherapy are able to deliver results to a patient in a few sessions instead of a few months.

Brainspotting therapy changed my life, but after completing the therapy I didn’t “know” anything different. Brainspotting did not impart intellectual or cognitive knowledge. I was able to notice how my body responded to my emotions. I was also able to release stored emotional energy that had previously caused me distress in certain situations. Brainspotting did not significantly change my behavior, and it would be difficult to quantify how my life changed with an objective number.

These kinds of subjective, patient-centered results are difficult for our modern evidence-based system to quantify. Researchers hesitate to measure things like “insight,” “body energy,” “happiness,” or “self-actualization.” However, it is these messy and human concepts that clinicians need to see in research journals in order to learn how to practice a profession centered on human connection.

4. People Learn from People not Numbers – Publish or Perish

Once a research study is complete, it is delivered to the professional community through a research journal. Modern research journals focus on cold, data-driven outcomes and ignore things like impressionistic or phenomenological case studies and patients’ subjective impressions of a modality. This choice makes the modern research journal useless to most practicing clinicians. Remember when I said that I read old academic journals? I read papers from the 1970s and 1980s because they actually discuss therapy techniques, style, and research that might help me understand a patient. Recent research articles look more like Excel spreadsheets.

The corporatization of healthcare and academia not only changed hospitals; it changed universities as well. The people designing and running research studies and publishing those papers have PhDs. Academia is an extremely competitive game. Not only do you have to hustle to get a PhD; you have to keep hustling once you have one. How do you compete with other academics once you get your PhD?

The answer is that you get other people to cite your research in their research. You raise your status as an academic, or the status of your academic journal, based on how many people cite your articles in their articles. A journal’s citation rate is summarized by its impact factor (roughly, the average number of citations its recent articles receive), and an author’s citations are measured with metrics such as the h-index or the RCR (Relative Citation Ratio). In my opinion, many of the journals and academics with low scores on these metrics are the best in the profession.
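Both metrics are simple arithmetic on citation counts. The Python sketch below uses the standard definitions: the h-index is the largest number h such that an author has h papers cited at least h times each, and a journal’s two-year impact factor for a given year is the citations received that year by items it published in the previous two years, divided by the number of citable items it published in those two years. All citation counts are hypothetical, and the function names are illustrative.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count < rank:
            break  # this paper (and every paper below it) has too few citations
        h = rank  # the top `rank` papers all have >= rank citations
    return h


def two_year_impact_factor(citations_this_year: int, citable_items_prev_two_years: int) -> float:
    """Citations received this year to items from the prior two years,
    divided by the number of citable items published in those two years."""
    return citations_this_year / citable_items_prev_two_years


# Hypothetical authors: many moderately cited papers vs. one widely cited paper.
author_a = [50, 40, 33, 20, 12, 8, 5, 1]
author_b = [300, 4, 2, 1]
print(h_index(author_a), h_index(author_b))  # 6 2

# Hypothetical journal: 600 citations this year to 200 articles from the prior two years.
print(two_year_impact_factor(600, 200))  # 3.0
```

In this made-up example, author B’s 300-citation paper barely moves the h-index, which rewards a steady volume of cited papers over a single widely cited one.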

The modern research system’s focus on these metrics has certainly not produced any page-turners. In fact, this competitive academic culture has turned modern journals into garbage that builds careers for the people who write them instead of creating change in the clinical profession. Academics research things that will get cited, not things that will help anyone, and certainly not anything that anyone wants to read.

Often the abstract for a modern research paper begins like this: “In order to challenge the prevailing paradigm, we took the data from 7 studies and extrapolated it against our filter in order to refine data to compare against a metric…” These are papers written to be cited, not read. They are the modern equivalent of web pages designed to be picked up by Google but never read by humans.

5. Good Psychology Thrives in Complexity – In-tuition is Out

Do you remember the middle school counselor who said “I understand how you are feeling” with a dull, blank look in her eyes? Remember how that didn’t work?

Good therapy is about a clinician teaching a patient to use their own intuition and the clinician using their own. It is not about memorizing phrases and cognitive suggestions. The best modalities are ways of understanding and conceptualizing patients that allow a therapist to apply their own intuition. A modality becomes easier to study, but less effective, when it strips out all of the opportunity for personality, individuality and unique life experience that a clinician might need to make a genuine connection with a patient.

Research studies are deeply uncomfortable with not being able to control every variable in a therapy room. However, a therapy modality that strips that much discretion away from the clinician could be done by a computer. Why is it not okay to research more abstract, less definable properties that are still helpful and observable?

For example, let’s say that these were the research findings:

“Clinicians who introduced patients in the first session to the idea that emotion is experienced somatically first and cognitively second had fewer patients drop out after the first session.”

or

“Clinicians that use a parts based approach to therapy (Jungian, IFS, Voice Dialogue, etc.) were able to reduce trauma symptoms faster than cognitive and mindfulness based approaches.”

If those statements are true then why does it matter HOW those clinicians are implementing those conceptualizations in therapy? If we know that certain strategies of conceptualization are effective then why does research need to control how those conceptualizations are applied?

If clinicians who conceptualize cases in a certain way tend to keep patients, then why does it matter if we can’t control for all the other unique variables that each clinician introduces into treatment? With a big enough sample, we can still see what types of training and what modes of thinking are working.
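The “big enough sample” point can be illustrated with a standard two-proportion z-test, a statistical technique supplied here for illustration rather than one the argument above specifies. The sketch compares first-session dropout rates between two hypothetical groups of clinicians (for example, those who introduce a somatic framing in session one versus those who do not). The dropout rates are the same in both scenarios, 20% versus 30%; only the number of patients changes. All counts are invented, and a test like this on observational data shows an association, not that the conceptualization itself caused the difference.

```python
import math


def two_proportion_z_test(drops_a: int, n_a: int, drops_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test. Returns (z statistic, two-sided p-value)."""
    rate_a, rate_b = drops_a / n_a, drops_b / n_b
    pooled = (drops_a + drops_b) / (n_a + n_b)  # dropout rate if the groups did not differ
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / se
    # Two-sided p-value from the standard normal tails: erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Hypothetical: 20% vs 30% first-session dropout, at two sample sizes.
for n in (50, 500):
    z, p = two_proportion_z_test(drops_a=n // 5, n_a=n, drops_b=3 * n // 10, n_b=n)
    print(f"{n} patients per group: z = {z:.2f}, p = {p:.4f}")
```

With 50 patients per group, a 10-point difference in dropout could easily be chance (p is roughly 0.25); with 500 per group, the same difference is very unlikely to be chance alone, even though nothing about how individual clinicians run their sessions has been controlled.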

Modern research has become more interested in why something works than in simply finding what works. If patients with trauma and their clinicians all favor a certain modality, then why does it matter if we can’t isolate and control all the variables present in those successful sessions? Research has stayed away from modalities that regulate the subcortical brain and instead emphasized more measurable cognitive variables simply because it is harder to measure the variables that make therapy for trauma effective!

This is a whole other article, but the American medical community has become fixated on managing symptoms instead of curing or preventing actual illness. Research has become hostile to variables that involve affective experience or clinical complexity, or that challenge the institutional status quo. The concept of “evidence” needs to be expanded to include scientifically plausible working theories that have been validated by clinicians and patients alike. This is especially important for diagnoses that are difficult to generalize broadly, like dissociative and affective disorders.

6. The not so Totally Random-ized Controlled Trial

Evidence-based practice posed a problem for the drug industry. However, the industry soon realized that evidence-based medicine could work in its favor, since research published in prestigious journals carried more weight than sales representatives. Today those same journals have become such a dominant force in clinical practice that they are used as a cudgel, leaving little room for clinical discretion. This fuels the problems of overdiagnosis and overmedication. Researchers and the profession at large are incentivized to broaden diagnostic criteria and increase the number of diagnoses made in order to sell drugs. The drug industry has a vested interest in legitimizing questionable diagnoses, broadening drug indications, and promoting excessive pharmaceutical solutions.

Furthermore, corruption plagues clinical research. Academics essentially go into debt to pay for the privilege of legitimizing pharmaceutical companies’ interests, disguised as “randomized controlled trials” designed to have predetermined outcomes. The studies are often run impeccably, but they are framed around predetermined conclusions. We must look not just at what research says but at who moved the goalposts of what we research and whose interests that framing prioritizes. Our research should focus on what we don’t know rather than what we think we know. Let’s dig into the natural course of diseases and explore alternative treatments that corporations have no vested interest in.

7. In Conclusion – Results

Psychotherapy is a modality conducted between humans, and it is best learned about and conveyed in a medium that considers our humanity. The interests of modern research institutions and publishing bodies largely contradict the interests of psychotherapy as a profession. The trends in modern evidence-based practice make it exceptionally poor at evaluating the techniques and practices that are actually helping patients in the field or that are popular with trauma-focused clinicians.

Addendum:

Before someone leaves a comment, yes, I know about CPT, CBT-D, and TF-CBT. I’ve read those books. I’m going to call CBT-BS before you post that comment. So before you ask, no, they don’t hold a candle to Brainspotting, Sensorimotor, Internal Family Systems, QEEG Neurostimulation, or any of the other effective brain based and somatic based medicine for PTSD and trauma.

Further Reading:

Frattaroli, Eva. Healing the Soul in the Age of the Brain: Becoming Conscious in an Unconscious World. Penguin, 2001.

Shapiro, Francine. Eye Movement Desensitization and Reprocessing (EMDR) Therapy, Third Edition: Basic Principles, Protocols, and Procedures. Guilford Press, 2017.

Levine, Peter A. Waking the Tiger: Healing Trauma. North Atlantic Books, 1997.

Ogden, Pat, and Janina Fisher. Sensorimotor Psychotherapy: Interventions for Trauma and Attachment. W.W. Norton & Company, 2015.

Van der Kolk, Bessel A. The Body Keeps the Score: Brain, Mind, and Body in the Healing of Trauma. Penguin Books, 2014.

Grand, David. Brainspotting: The Revolutionary New Therapy for Rapid and Effective Change. Sounds True, 2013.

Barlow, David H. “Unraveling the Mysteries of Anxiety and Its Disorders from the Perspective of Emotion Theory.” American Psychologist, vol. 55, no. 11, 2000, pp. 1247-1263.

Shedler, Jonathan. “The Efficacy of Psychodynamic Psychotherapy.” American Psychologist, vol. 65, no. 2, 2010, pp. 98-109.

Kazdin, Alan E. “Understanding How and Why Psychotherapy Leads to Change.” Psychotherapy Research, vol. 19, no. 4-5, 2009, pp. 418-428.