Picture this: A company called Second Sight Medical Products spent years convincing blind patients to have computerized implants surgically embedded in their eyes. The Argus II retinal prosthesis system promised to restore partial vision through an array of electrodes that stimulated the retina. Patients underwent invasive eye surgery to have electronics permanently attached to their retinas. Then in 2020, Second Sight looked at their balance sheet and decided there wasn’t enough profit in helping blind people see. They didn’t go bankrupt – they just pivoted to a different market. Hundreds of patients were left with obsolete hardware in their eyes, no software updates, no technical support, and devices that started malfunctioning. The terms of service allowed it. The patients had no recourse. Their vision, quite literally, was held hostage by quarterly earnings reports.

Welcome to the future of healthcare technology.

Taproot Therapy Collective does not use AI or endorse it. We’re just aware of its effects on the industry, so we’re trying to make patients and providers aware of the risks.

The Great AI Gold Rush of 2024

The therapy world has gone AI-crazy. Every practice management software, every note-taking app, every scheduling platform now proudly displays an “AI-powered” badge like it’s a mark of sophistication rather than a red flag. Microsoft shoved Copilot into every corner of Windows. Coding platforms promise AI will write your website. Therapy note software swears its AI assistant will revolutionize your documentation.

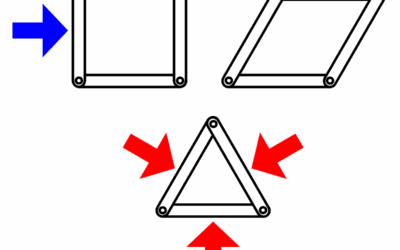

Here’s what they don’t tell you: These aren’t intelligent systems. They’re reverse search engines – massive probabilistic pattern-matching machines that guess what words typically go together based on billions of scraped documents. They don’t understand therapy, trauma, or the human condition. They understand that “debrief” often appears near “decompensated” and “stabilized” in clinical notes, so they’ll confidently write that your patient “decompensated and stabilized in session” even if all they did was talk about their cat for 50 minutes.

How These Things Actually Work (Spoiler: They Don’t)

Let’s demystify the magic. Large Language Models are essentially glorified autocomplete on steroids. They’ve ingested the internet – every Reddit thread, every Wikipedia article, every publicly available therapy manual – and learned statistical patterns about which words tend to follow other words. When you type “The patient presented with,” the AI thinks, “Based on 10 million similar sentences, the next words are probably ‘symptoms of anxiety.’”

This works great for writing generic emails. It’s catastrophic for clinical documentation.

Consider this scenario: You conduct a parts-based therapy session. You mention debriefing the patient. The AI, having read thousands of notes where “debriefing” appears alongside “decompensation,” helpfully adds that your patient “experienced brief decompensation but stabilized by session end.” Congratulations, you’ve just documented a crisis that never happened. Your liability insurance will love that.
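To make the scenario concrete, here is a deliberately tiny sketch of next-word prediction. It is a toy bigram counter, not how a production Large Language Model is built (those use neural networks over far more text), and the three training notes are invented for illustration. The point is only that the completion comes from what is statistically common in the training text, not from what happened in your session.

```python
from collections import Counter, defaultdict

# Invented training notes (an assumption for illustration).
# Two of the three happen to mention a crisis.
TRAINING_NOTES = [
    "debriefing after decompensation",
    "debriefing after decompensation",
    "debriefing after session",
]


def build_bigram_counts(notes):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for note in notes:
        words = note.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def autocomplete(counts, start, max_words=2):
    """Extend `start` by repeatedly picking the most frequent next word."""
    words = [start]
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no training data for this word, so stop
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


counts = build_bigram_counts(TRAINING_NOTES)
completion = autocomplete(counts, "debriefing")
print(completion)  # debriefing after decompensation
```

Nothing in the code knows whether anyone decompensated. It only knows that, in its training text, "after" was followed by "decompensation" more often than by "session".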

The Three-Headed Monster

Strip away the marketing glitter and you’ll find almost every AI service runs on one of three engines: OpenAI’s ChatGPT (bankrolled by Microsoft in a deal so twisted that Microsoft gets everything if OpenAI fails to meet certain mysterious conditions), Anthropic’s Claude (Amazon’s bet), or xAI’s Grok (Elon Musk’s fever dream). Maybe some brave soul is using China’s DeepSeek, but probably not in healthcare. That fancy therapy note AI with the soothing interface and HIPAA-compliant promises? It’s just ChatGPT in a prettier dress.

The Money Pit

Silicon Valley loves to lose money. Uber lost billions establishing a monopoly. Amazon bled cash for years to crush competition. But here’s the difference: Those companies were building moats. AI companies are building money incinerators.

OpenAI reportedly lost about $5 billion in 2024 on roughly $4 billion in revenue. That’s not a typo – they spent $2.25 to make $1. Running these models costs $2 billion annually just in compute power. Training new ones? Another $3 billion. The cost of answering a single ChatGPT query ranges from $0.01 to $0.36. For these companies to break even at current usage levels, users would need to pay $700-800 monthly.
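The $2.25 figure follows directly from the two headline numbers. A minimal sketch of the arithmetic, assuming total spending is simply revenue plus the reported loss (a simplification that ignores other income), with a hypothetical helper name:

```python
def spend_per_revenue_dollar(revenue, loss):
    """Dollars spent per dollar of revenue, assuming spend = revenue + loss."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue + loss) / revenue


# Figures in billions of dollars, taken from the paragraph above.
ratio = spend_per_revenue_dollar(revenue=4.0, loss=5.0)
print(f"${ratio:.2f} spent per $1.00 of revenue")  # $2.25 spent per $1.00 of revenue
```

In other words, $4 billion came in and $9 billion went out.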

The Dealer’s Dilemma

Here’s the part that should terrify you: There is no path to profitability. None. Zero. The math doesn’t work.

Even if we’re generous and only count the GPUs grinding away in data centers and the nuclear power plant’s worth of electricity they consume, the costs are astronomical. Each query burns through computational resources that would make 1990s supercomputers weep. The electricity bill alone for a single large model training run can hit millions. The GPUs? A single server full of them can cost more than your house.

For AI companies to make money at current usage levels, they’d need to charge $1,000+ per month per user. That’s not hyperbole – that’s math. Your practice can’t afford that. Most practices can’t afford that. So what’s their plan?

The same plan every dealer uses: First taste is free. Get you hooked. Make you dependent. Then jack up the price when you can’t quit.

The Addiction Model

Let me put on my therapist hat and talk to you like I’d talk to a patient dabbling with substances:

“I hear you saying this AI makes your life easier. It saves you time. You can see more patients. The documentation practically writes itself. And right now it’s only $30 a month – what a deal! But let’s talk about what happens when the price goes up. When it’s $300. When it’s $1,000. When you’ve built your entire practice around it and can’t function without it.

That’s not innovation. That’s dependency. And the people selling it to you? They know exactly what they’re doing.”

These companies are following the addiction playbook:

- Tolerance: You need more features, more AI, more automation

- Withdrawal: Try working without it after six months – watch your productivity crash

- Escalation: Premium tiers, add-on features, “enterprise” pricing

- Denial: “I can quit anytime” (but you’ve forgotten how to write notes manually)

The Pusher’s Paradise

Why are they giving it away so cheap? Same reason dealers give free samples. They’re not selling software – they’re creating dependence. Every workflow you optimize around AI, every template you customize, every shortcut you take – that’s another hook in your practice.

They’re betting that by the time they need to charge real prices (remember: $1,000+ monthly just to break even on power and compute), you’ll be so deep in their ecosystem that you’ll pay anything. Your entire practice will run on their rails. Your notes, your scheduling, your billing – all dependent on a company that’s hemorrhaging money faster than a severed artery.

The tech press won’t tell you this. They’re too busy breathlessly reporting on the next funding round, the next valuation spike, the next promise of AGI just around the corner. Meanwhile, API access – the actual business use of these models – makes up less than 30% of OpenAI’s revenue. If there were a real industry here, wouldn’t businesses be the primary customers?

The HIPAA Nightmare

Your AI note-taking service promises HIPAA compliance. They even signed a Business Associate Agreement. Feel secure? You shouldn’t.

Most AI vendors are essentially middlemen slapping a healthcare interface on top of ChatGPT or Claude. They promise your data won’t be used for training. They swear it’s encrypted. They guarantee compliance. But when the money runs out – and it will – what happens to your patient data?

The terms of service you clicked through without reading probably include clauses like “in the event of acquisition, merger, or asset sale, user data may be transferred to the acquiring entity.” Translation: When this startup fails and gets bought for parts, your therapy notes become someone else’s training data.

The Healthcare Tech Graveyard

Before we talk hypotheticals, let’s examine the bodies already buried. Healthcare technology companies have a spectacular history of leaving providers and patients in catastrophic binds – all perfectly legal under the terms they signed.

Practice Fusion: The $145 Million Betrayal

Practice Fusion offered “free” EHR software to small practices. The catch? They sold de-identified patient data to pharmaceutical companies and took kickbacks from an opioid manufacturer to push opioid prescriptions through their clinical decision support alerts. With a federal investigation under way, the company was sold to Allscripts for just $100 million, pennies on the dollar compared with its earlier $1.5 billion valuation, and the DOJ case ended in a $145 million settlement. Thousands of practices had to scramble to migrate their data or risk losing it entirely. The terms of service? They allowed all of it.

The FTC Privacy Scandal

The same Practice Fusion tricked patients into thinking they were sending private messages to their doctors. Instead, these deeply personal health details – including medication questions, symptoms, and diagnoses – were published on a public review website. Patients wrote things like “Dr. [Name], my Xanax prescription that I received on Monday was for 1 tablet a day but usually it’s for 2 tablets a day” thinking they were communicating privately. The company’s terms technically disclosed this, buried in fine print.

MDLIVE and the Cigna Shuffle

When Cigna acquired MDLIVE, thousands of independent practitioners who relied on the platform for telehealth suddenly found themselves subject to new terms, new pricing, and integration with a massive insurance company’s systems. Patient data that once lived on an independent platform now resided within an insurance conglomerate’s ecosystem. All legal. All devastating for practices that built their virtual care around the platform.

These aren’t outliers. They’re the norm.

When the Music Stops

The venture capital spigot won’t flow forever. When investors realize that AI companies need to 10x their prices to achieve profitability – and that such prices would kill demand – the funding will dry up. Then what?

Scenario 1: The Sudden Shutdown

One Monday morning, you log in to find a cheerful message: “We’re pivoting to focus on enterprise solutions. Consumer services will sunset in 30 days. Download your data now!” Except the export function mysteriously doesn’t work, support doesn’t answer, and 30 days later, five years of session notes vanish into the ether.

Scenario 2: The Acquisition

“Exciting news! We’ve been acquired by MegaCorp!” Six months later, your specialized therapy features are deprecated, the interface is “simplified” (read: gutted), and your subscription price triples. The BAA? Still technically valid, just transferred to a company with 47 subsidiaries and a privacy policy longer than War and Peace.

Scenario 3: The Zombie Service

The company doesn’t die – it just stops living. Updates cease. Bugs multiply. Features break one by one. Support tickets go unanswered. The service limps along, technically functional but practically unusable, while you’re locked in by the thousands of notes you can’t export.

Scenario 4: The Slow Squeeze

This one’s insidious. Month by month, features move behind paywalls. First it’s “premium templates.” Then “advanced export options.” Then “priority processing.” Soon you’re paying $50, then $100, then $300 monthly for features that used to be free. Here’s the kicker: you legally own your data, but accessing it now costs extra. Downloading your own notes? That’s a “premium feature.” Want to migrate away? The export function only works with the “Enterprise Migration Package” at $5,000. Perfectly legal. Utterly predatory.

The Parts-Based Therapy Problem (And Others Like It)

AI’s inability to understand context creates specific vulnerabilities for different therapeutic modalities:

Parts-Based/IFS Therapy: The AI might interpret “parts work” as group therapy, document non-existent “alters,” or confuse therapeutic parts language with dissociative symptoms.

EMDR: Expect the AI to add bilateral stimulation to sessions where you just discussed the therapy conceptually, or document “complete trauma processing” after a preparation session.

Somatic Approaches: The AI will likely medicalize body awareness exercises, potentially documenting panic symptoms when a client simply noticed their breathing.

DBT: Every skills discussion becomes “patient practiced distress tolerance skills and demonstrated improved emotional regulation” – even if they didn’t.

Practical Survival Strategies

1. The Paper Trail

Start now. Export everything monthly. Print critical notes. Maintain local backups. Yes, it’s a pain. Yes, it defeats the purpose of going digital. But when your AI service vanishes overnight, you’ll thank yourself.
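If you want the monthly export habit to be more than good intentions, a small script can do the filing for you. The sketch below is one possible approach, not a vetted compliance tool: it copies an export file into a dated folder, confirms the copy is byte-for-byte identical using a SHA-256 checksum, and logs the result. The file names and folder layout are hypothetical, and it assumes the backup location is encrypted storage you control, since these files contain protected health information.

```python
import hashlib
import shutil
import tempfile
from datetime import date
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_export(export_file: Path, backup_root: Path) -> Path:
    """Copy an export into backup_root/<today>/ and verify the copy."""
    if export_file.stat().st_size == 0:
        raise ValueError(f"{export_file} is empty; the export may have failed")
    target_dir = backup_root / date.today().isoformat()
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / export_file.name
    shutil.copy2(export_file, target)
    checksum = sha256_of(target)
    if checksum != sha256_of(export_file):
        raise IOError("backup copy does not match the original")
    # Append checksum and location to a running manifest for later audits.
    with (backup_root / "manifest.txt").open("a", encoding="utf-8") as manifest:
        manifest.write(f"{checksum}  {target}\n")
    return target


# Demonstration with a throwaway file instead of real patient data.
with tempfile.TemporaryDirectory() as tmp:
    export = Path(tmp) / "notes_export.csv"
    export.write_text("note_id,date\n1,2024-01-05\n", encoding="utf-8")
    saved = backup_export(export, Path(tmp) / "backups")
    backup_matches = saved.read_bytes() == export.read_bytes()
    print("backup verified:", backup_matches)
```

The empty-file check matters more than it looks: an export that silently produces a zero-byte file is exactly the failure you want to catch before the service disappears.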

2. The Hybrid Approach

Use AI as a rough draft only. Never let it touch final documentation. Dictate your notes, let AI transcribe, then rewrite everything yourself. Time-consuming? Yes. Legally safer? Absolutely.

3. The Exit Strategy

Before adopting any AI service:

- Can you export all data in a usable format?

- What happens to your data if they shut down?

- Is the export function tested and functional NOW?

- Do you have a manual backup process?
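"Usable format" and "functional NOW" are both checkable. As a rough sketch, assuming your vendor exports notes as CSV, a few lines can confirm the file actually contains what you expect. The column names below are hypothetical, so substitute whatever your own export uses.

```python
import csv
import io

# Hypothetical column names; adjust to match your vendor's export.
REQUIRED_COLUMNS = {"client_id", "session_date", "note_text"}


def check_export(csv_text: str) -> list[str]:
    """Return a list of problems found in an exported CSV (empty if none)."""
    problems = []
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        problems.append(f"missing columns: {sorted(missing)}")
        return problems  # later checks depend on these columns
    rows = list(reader)
    if not rows:
        problems.append("export contains no notes")
    empty = sum(1 for row in rows if not (row["note_text"] or "").strip())
    if empty:
        problems.append(f"{empty} notes have empty text")
    return problems


good = "client_id,session_date,note_text\nA1,2024-03-01,Discussed sleep routine\n"
bad = "client_id,session_date\nA1,2024-03-01\n"
print(check_export(good))  # []
print(check_export(bad))   # ["missing columns: ['note_text']"]
```

Run a check like this on a real export before you sign up, and again every time you test the export function. An export that drops the note text is not an export.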

4. The Legal Review

Have an attorney review:

- The terms of service change clauses

- Data ownership provisions

- Liability limitations

- Termination procedures

- Successor entity rights

5. The Old School Option

Controversial opinion: Maybe we don’t need AI for therapy notes. Therapists managed for decades with pen and paper, brief notes, and actual human judgment. The promise of saving 10 minutes per session isn’t worth risking your license, your patients’ privacy, or your practice’s viability.

Red Flags in Terms of Service

Watch for these poison pills:

- “We may modify these terms at any time with notice posted to our website”

- “User grants Company a perpetual, irrevocable license to all submitted content”

- “In case of insolvency, user data becomes an asset of the estate”

- “Company provides service ‘as is’ with no warranties, express or implied”

- Arbitration clauses that waive your right to class action lawsuits
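A first pass over a long terms-of-service document can be automated. The sketch below scans text for phrases resembling the red flags listed above. The patterns are my own rough approximations, real contracts word these clauses in many different ways, and a keyword scan is no substitute for having an attorney read the document. Treat a hit as a reason to read that section closely, and treat no hits as meaning nothing at all.

```python
import re

# Rough, illustrative patterns (assumptions); real clauses vary widely.
RED_FLAGS = {
    "unilateral changes": r"modify these terms at any time",
    "perpetual license": r"perpetual,? irrevocable license",
    "data as bankruptcy asset": r"asset of the estate",
    "no warranties": r"\bas is\b",
    "class action waiver": r"waive.{0,40}class action",
}


def find_red_flags(terms_text: str) -> list[str]:
    """Return the names of red-flag patterns found in the given text."""
    # Lowercase and collapse whitespace so line breaks don't hide a phrase.
    normalized = " ".join(terms_text.lower().split())
    return [
        name
        for name, pattern in RED_FLAGS.items()
        if re.search(pattern, normalized)
    ]


sample = (
    "We may modify these terms at any time with notice posted to our "
    "website. The service is provided as is with no warranties."
)
flags = find_red_flags(sample)
print(flags)  # ['unilateral changes', 'no warranties']
```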

The Uncomfortable Truth

The AI revolution in therapy isn’t a revolution – it’s a VC-subsidized illusion. These companies are betting they’ll become indispensable before the money runs out. They’re hoping you’ll be so dependent on their services that you’ll pay whatever they demand when the bills come due.

But healthcare isn’t ride-sharing. You can’t “disrupt” the therapeutic relationship. You can’t “10x” empathy. And you definitely can’t trust your patients’ most vulnerable moments to companies that measure success in burn rate and user acquisition costs.

What Happens Next

When the bubble pops – and it will – expect:

Wave 1: The small players vanish. Therapy-specific AI startups fold or get acquired. Services shut down with minimal notice. Data exports fail. Lawyers get rich.

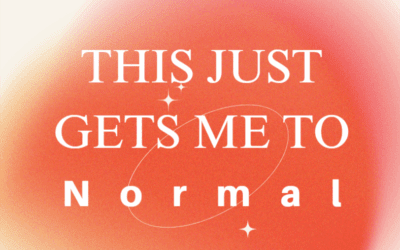

Wave 2: The survivors jack up prices. That $30/month service becomes $300. Then $500. Then “call us for enterprise pricing.” Features get paywalled. Support becomes “premium only.” The “free tier” disappears entirely.

Wave 3: Consolidation. Three or four major players divide the market. Innovation stops. Prices stabilize at astronomical levels. The promise of AI democratizing therapy tools becomes a joke no one remembers. You’re paying $1,000+ monthly for what used to cost $30, because what choice do you have? You’re hooked.

The Therapeutic Parallel

I tell my patients struggling with substances: “The drug always collects its debt. Always. Maybe not today, maybe not tomorrow, but eventually, it comes for everything it gave you and more.”

Same principle here. Every hour AI saves you now? You’ll pay for it later. Every shortcut, every automation, every “efficiency” – it’s all borrowed time. And the interest rate? It’s compounding daily.

Be aware. Be careful. Treat AI tools like you’d counsel a patient to treat that prescription they’re getting a little too fond of – with extreme caution, clear boundaries, and always, always an exit plan.

Action Items for Your Practice

Immediate:

- Audit all AI tools currently in use

- Review and understand all terms of service

- Implement manual backup procedures

- Test data export functions monthly

Short-term (3-6 months):

- Develop AI-free workflows as backup

- Train staff on manual documentation

- Build local storage solutions

- Create practice continuity plans

Long-term:

- Evaluate whether AI adds real value vs. risk

- Consider returning to proven, stable systems

- Invest in tools you control

- Build resilience over efficiency

The Historical Reality Check

Let’s talk probability. In the entire history of venture-backed technology companies, how many have survived burning $2.25 to make $1.00? Zero. Not one. Ever.

Companies with OpenAI’s burn rate trajectory have a 100% failure rate. WeWork burned $2 for every $1 in revenue – it imploded. Pets.com, Webvan, Kozmo.com – all torched cash at unsustainable rates and vanished. Even Uber, the poster child for “burn money to build a monopoly,” only burned about $1.50 per revenue dollar at its worst. And Uber had a clear path: eliminate drivers with self-driving cars (still hasn’t happened), crush competition, then jack up prices (happening now).

OpenAI has no such path. They can’t eliminate GPUs. They can’t reduce electricity consumption. They can’t make the models significantly cheaper to run without making them dumber. Physics and thermodynamics don’t care about disruption.

Here’s the actuarial table: Companies burning cash at OpenAI’s rate typically last 2-3 years post-peak funding. We’re in year 2. By historical precedent, your AI note-taking service has about 12-18 months left. Maybe 24 if they get one more desperate funding round. Plan accordingly.

The probability of ChatGPT “working out” based on historical precedent? Somewhere between zero and the chance of your patient actually reading your treatment plan. Both require magical thinking.

The Bottom Line

AI companies want you to believe they’re building the future of healthcare. In reality, they’re building elaborate Ponzi schemes funded by venture capitalists who’ve confused probabilistic text generation with intelligence. When the music stops – when the funding dries up, when the true costs become undeniable, when the companies pivot or fold or sell – you and your patients will be left holding the bag.

Your therapy practice survived before AI. It can survive after. The question is whether you’ll be prepared when the robots stop taking notes, or whether you’ll be like those Second Sight patients – dependent on technology that no longer has anyone behind it, holding desperately to promises that were never meant to be kept.

The time to prepare is now. Before the cheerful shutdown notice. Before the acquisition announcement. Before your patient data becomes someone else’s asset. Because in the end, the only thing artificial about AI might be the intelligence – and the only thing guaranteed is that someone, somewhere, is going to lose everything they trusted to these systems.

Don’t let it be you.
