I. What Is the Shannon-Weaver Model?

Claude Shannon (1916-2001) and Warren Weaver (1894-1978) were two American mathematicians and engineers whose collaborative work laid the foundation for modern information theory. Their groundbreaking research in the mid-20th century revolutionized our understanding of communication, paving the way for the digital age and profoundly influencing fields ranging from computer science and cryptography to linguistics and psychology.

This paper explores the lives and ideas of Claude Shannon and Warren Weaver, situating their thought within the historical and intellectual context of the 20th century and examining its enduring relevance for understanding the nature of information, communication, and technology. By engaging with Shannon and Weaver’s key concepts, such as information entropy, noise, and redundancy, and by considering their implications for fields such as psychology, anthropology, and media studies, this paper seeks to illuminate the significance of their work for navigating the challenges and possibilities of our increasingly information-driven world.

I like to think (and

the sooner the better!)

of a cybernetic meadow

where mammals and computers

live together in mutually

programming harmony

like pure water

touching clear sky.

I like to think

(right now, please!)

of a cybernetic forest

filled with pines and electronics

where deer stroll peacefully

past computers

as if they were flowers

with spinning blossoms.

I like to think

(it has to be!)

of a cybernetic ecology

where we are free of our labors

and joined back to nature,

returned to our mammal

brothers and sisters,

and all watched over

by machines of loving grace.

– Richard Brautigan

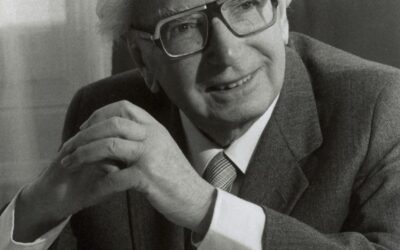

II. Biography of Claude Shannon

Claude Elwood Shannon was born on April 30, 1916, in Petoskey, Michigan. Growing up in Gaylord, Michigan, Shannon showed an early aptitude for mathematics and engineering, building model planes and a telegraph system to communicate with a friend’s house half a mile away.

Shannon earned bachelor’s degrees in electrical engineering and mathematics from the University of Michigan in 1936. He then went on to pursue graduate studies at the Massachusetts Institute of Technology (MIT), where he worked on Vannevar Bush’s differential analyzer, an early analog computer. It was during this time that Shannon wrote his groundbreaking master’s thesis, “A Symbolic Analysis of Relay and Switching Circuits” (1937), which applied Boolean algebra to the design of electrical circuits, laying the foundation for digital circuit design.

After completing his Ph.D. in mathematics at MIT in 1940, Shannon joined Bell Labs in 1941, where he would spend much of his career. During World War II, he worked on cryptography and secure communications systems, experiences that would later influence his work on information theory.

In 1948, Shannon published his seminal paper “A Mathematical Theory of Communication” in the Bell System Technical Journal, which laid the foundation for information theory. This work introduced concepts such as information entropy, channel capacity, and the fundamental theorems of data compression and transmission.

Throughout his career, Shannon made significant contributions to various fields, including cryptography, artificial intelligence, and computer science. He also had a playful side, inventing numerous devices such as a Roman numeral computer, a mind-reading machine, and a flame-throwing trumpet.

Shannon joined the faculty at MIT in 1956 while remaining affiliated with Bell Labs until 1972. He continued to work on various projects and inventions until Alzheimer’s disease began to affect his health in the 1990s. Claude Shannon passed away on February 24, 2001, leaving behind a legacy that continues to shape our understanding of information and communication.

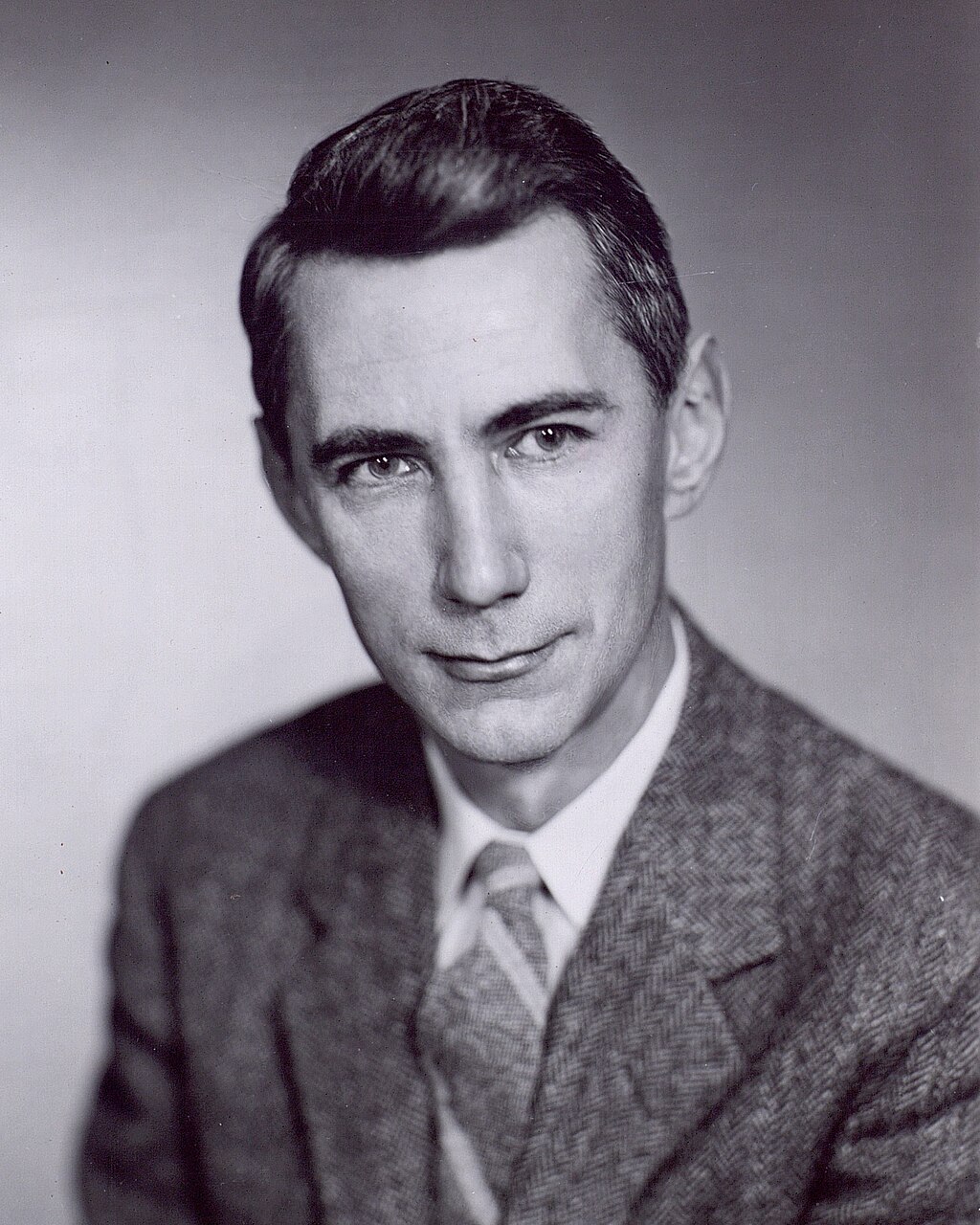

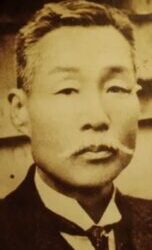

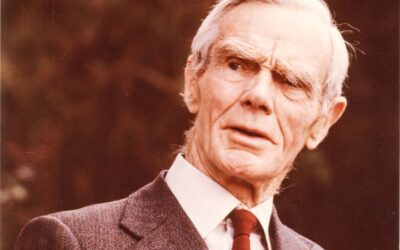

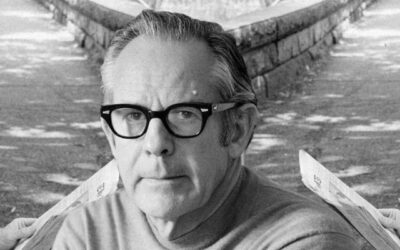

Dr. Warren Weaver, taken January 9, 1940. Cooksey-142

III. Biography of Warren Weaver

Warren Weaver was born on July 17, 1894, in Reedsburg, Wisconsin. Unlike Shannon, who was primarily a mathematician and engineer, Weaver’s background was more diverse, spanning mathematics, science administration, and the philosophy of science.

Weaver earned his bachelor’s degree in civil engineering from the University of Wisconsin in 1917. After serving in the U.S. Army Air Service during World War I, he returned to academia, completing his Ph.D. in mathematics at the University of Wisconsin in 1921.

From 1920 to 1932, Weaver taught mathematics at the University of Wisconsin, eventually becoming chairman of the mathematics department. In 1932, he joined the Rockefeller Foundation, where he would have a profound impact on the direction of scientific research in the United States.

As director of the Natural Sciences division at the Rockefeller Foundation from 1932 to 1955, Weaver played a crucial role in shaping the foundation’s research agenda. He was instrumental in supporting work in molecular biology, a term he coined in 1938, which would eventually lead to breakthroughs in genetics and biotechnology.

During World War II, Weaver served as head of the Applied Mathematics Panel of the U.S. Office of Scientific Research and Development, where he oversaw mathematical work related to the war effort, including operations research and the development of computing machines.

Weaver’s collaboration with Claude Shannon came in the late 1940s when he recognized the broader implications of Shannon’s work on information theory. In 1949, Weaver wrote an introduction to Shannon’s “The Mathematical Theory of Communication,” helping to make Shannon’s highly technical work accessible to a wider audience and extending its applications beyond engineering to fields such as linguistics and psychology.

In addition to his work on information theory, Weaver made significant contributions to the fields of machine translation and science communication. He served as president of the American Association for the Advancement of Science in 1954 and authored several influential works, including the essay “Science and Complexity” (1948) and the book “Lady Luck: The Theory of Probability” (1963).

Warren Weaver passed away on November 24, 1978, leaving behind a legacy of interdisciplinary scientific collaboration and a broader understanding of information and communication.

IV. Overview of Key Ideas

At the core of Shannon and Weaver’s work is the concept of information theory, a mathematical framework for understanding the fundamental principles of communication. Their ideas revolutionized our understanding of information, providing a quantitative basis for measuring and analyzing communication processes.

Information Entropy

One of the most significant contributions of Shannon and Weaver’s work is the concept of information entropy. Shannon introduced this idea in his 1948 paper, defining it as a measure of the uncertainty or randomness of a message source. Information entropy quantifies the average amount of information per symbol that a source produces, independent of the meaning or content of its messages.

The formula for information entropy is:

H = -Σ p(x) log₂ p(x)

Where H is the entropy, p(x) is the probability of a particular symbol or event occurring, and the sum is taken over all possible symbols or events.

This concept has profound implications for data compression, coding theory, and our understanding of the nature of information itself. It allows us to quantify the minimum amount of data needed to represent a message, leading to more efficient communication systems.
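The entropy formula above can be tried out directly. The following is a minimal sketch, not code from Shannon's paper; the function names `shannon_entropy` and `message_entropy` are illustrative assumptions, and estimating probabilities from symbol frequencies in a single message is a simplification.

```python
import math
from collections import Counter


def shannon_entropy(probabilities):
    """Return H = -sum(p * log2(p)) in bits, skipping zero-probability symbols."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def message_entropy(message):
    """Estimate entropy per symbol from the symbol frequencies in one message."""
    counts = Counter(message)
    total = len(message)
    return shannon_entropy(count / total for count in counts.values())


# A fair coin carries 1 bit per toss; a biased coin carries less.
print(shannon_entropy([0.5, 0.5]))            # 1.0
print(round(shannon_entropy([0.9, 0.1]), 3))  # 0.469
# Eight equally likely symbols need 3 bits each.
print(shannon_entropy([1 / 8] * 8))           # 3.0
print(message_entropy("abab"))                # 1.0
```

The biased coin illustrates the point made above: the more predictable the source, the fewer bits are needed on average to describe its output.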

Channel Capacity and Noise

Another key concept in Shannon and Weaver’s work is the idea of channel capacity. This refers to the maximum rate at which information can be reliably transmitted over a communication channel. Shannon’s channel capacity theorem states that, with suitable coding, information can be transmitted with an arbitrarily small probability of error at any rate below the channel capacity, but not at rates above it.

Closely related to channel capacity is the concept of noise, which refers to any interference or distortion that affects the transmission of a message. Shannon and Weaver’s work provided a framework for understanding how to design communication systems that can overcome noise and achieve reliable transmission.
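To make the link between noise and capacity concrete, here is a small sketch for one standard textbook case, the binary symmetric channel, in which each transmitted bit is flipped with a fixed probability. This channel is an assumption chosen for illustration and is not discussed above; its capacity is 1 minus the binary entropy of the flip probability. The function names are hypothetical.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a two-outcome source with probabilities p and 1 - p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(flip_probability):
    """Capacity, in bits per channel use, of a binary symmetric channel."""
    return 1.0 - binary_entropy(flip_probability)


for p in (0.0, 0.01, 0.11, 0.5):
    print(f"flip probability {p:.2f} -> capacity {bsc_capacity(p):.3f} bits per use")
```

A noiseless channel carries one full bit per use, while a channel that flips bits half the time carries nothing, because its output is statistically independent of its input.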

The Shannon-Weaver Model of Communication

Building on Shannon’s mathematical theory, and on the schematic diagram of a communication system in Shannon’s 1948 paper, Weaver presented a more general model of communication that has become widely influential in various fields. The Shannon-Weaver model consists of several key components:

- Information Source: The origin of the message.

- Transmitter: Encodes the message into signals.

- Channel: The medium through which the signal is transmitted.

- Noise Source: Any interference that affects the signal during transmission.

- Receiver: Decodes the signal back into a message.

- Destination: The intended recipient of the message.

This model provides a general framework for understanding communication processes, from interpersonal communication to mass media and digital networks.
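The components listed above can be sketched as a toy pipeline. This is only an illustration under simplifying assumptions: the message is text, the signal is a list of bits, and the noise source flips each bit independently. All function names and parameters here are hypothetical.

```python
import random


def transmitter(message):
    """Encode a text message as a signal: 8 bits per UTF-8 byte."""
    bits = []
    for byte in message.encode("utf-8"):
        bits.extend((byte >> shift) & 1 for shift in range(7, -1, -1))
    return bits


def channel(signal, flip_probability, rng):
    """Carry the signal; the noise source flips each bit with the given probability."""
    return [bit ^ 1 if rng.random() < flip_probability else bit for bit in signal]


def receiver(signal):
    """Decode the received bits back into text, marking undecodable bytes."""
    data = bytearray()
    for i in range(0, len(signal) - len(signal) % 8, 8):
        byte = 0
        for bit in signal[i:i + 8]:
            byte = (byte << 1) | bit
        data.append(byte)
    return data.decode("utf-8", errors="replace")


def communicate(message, flip_probability=0.0, seed=0):
    """Information source -> transmitter -> noisy channel -> receiver -> destination."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    return receiver(channel(transmitter(message), flip_probability, rng))


print(communicate("hello"))                         # noiseless channel: hello
print(communicate("hello", flip_probability=0.05))  # noise may garble the text
```

With no noise the destination receives exactly what the source sent; raising `flip_probability` shows how quickly an unprotected message degrades.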

Redundancy and Error Correction

Shannon and Weaver’s work also introduced the important concept of redundancy in communication. Redundancy refers to the repetition or predictability in a message that allows for error detection and correction. This idea has important applications in areas such as data transmission, where redundant information can be used to detect and correct errors caused by noise or interference.
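One of the simplest ways to see redundancy at work is a repetition code, where every bit is sent several times and the receiver takes a majority vote. The sketch below is an assumed illustration rather than a scheme from Shannon and Weaver's text, and practical systems use far more efficient codes; the function names are hypothetical.

```python
def encode_repetition(bits, copies=3):
    """Add redundancy by repeating every bit `copies` times."""
    return [bit for bit in bits for _ in range(copies)]


def decode_repetition(received, copies=3):
    """Recover each bit by majority vote over its group of copies."""
    decoded = []
    for i in range(0, len(received), copies):
        group = received[i:i + copies]
        decoded.append(1 if sum(group) > len(group) / 2 else 0)
    return decoded


message = [1, 0, 1, 1]
sent = encode_repetition(message)
# Simulate noise: flip one bit in the first group and one in the last.
corrupted = sent.copy()
corrupted[1] ^= 1
corrupted[-1] ^= 1

print(decode_repetition(corrupted) == message)  # True
```

The price of this protection is rate: three bits travel over the channel for every bit of message, which is the trade-off between redundancy and efficiency described above.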

Cryptography and Secrecy Systems

Drawing on his wartime work in cryptography, Shannon also made significant contributions to the theoretical foundations of secure communication. His work on cryptography, published in the paper “Communication Theory of Secrecy Systems” (1949), laid the groundwork for modern cryptographic techniques and the concept of perfect secrecy.
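The classic example of a perfectly secret system is the one-time pad, which is brought in here as an illustration and is not named in the text above. A minimal sketch follows, assuming the key is truly random, as long as the message, kept secret, and never reused; the function names are hypothetical.

```python
import secrets


def generate_key(length):
    """Draw a fresh random key with as many bytes as the message."""
    return secrets.token_bytes(length)


def xor_bytes(data, key):
    """XOR data with a key of equal length; the same call encrypts and decrypts."""
    if len(key) != len(data):
        raise ValueError("a one-time pad key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


message = b"attack at dawn"
key = generate_key(len(message))
ciphertext = xor_bytes(message, key)

print(xor_bytes(ciphertext, key) == message)  # True
```

Because every possible plaintext of that length corresponds to some key, the ciphertext alone reveals nothing about the message beyond its length. The requirement for a key as long as the message is what makes the scheme impractical for most uses.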

V. Implications for Psychology, Anthropology, and Media Studies

Shannon and Weaver’s work has had far-reaching implications across various disciplines, including psychology, anthropology, and media studies. Their ideas about the nature of information and communication have provided new frameworks for understanding human cognition, cultural transmission, and the impact of media technologies.

Relevance to Psychology

In the field of psychology, Shannon and Weaver’s information theory has influenced our understanding of cognitive processes, perception, and decision-making. The concept of information entropy has been applied to models of human memory and learning, providing insights into how the brain processes and stores information.

For example, the idea of redundancy in communication has been linked to the concept of cognitive load in psychology. Just as redundancy in a message can help overcome noise in a communication channel, redundancy in information presentation can aid in learning and memory retention by reducing cognitive load.

Moreover, the Shannon-Weaver model of communication has been influential in understanding interpersonal communication and social psychology. It has provided a framework for analyzing how messages are encoded, transmitted, and decoded in social interactions, and how various forms of “noise” (such as cognitive biases or cultural differences) can affect this process.

Relevance to Depth Psychology and Trauma Treatment

While Shannon and Weaver’s work was primarily focused on the technical aspects of communication, their ideas have interesting implications for depth psychology and trauma treatment. The concept of information entropy, which measures the uncertainty or randomness in a message, can be metaphorically applied to understanding the chaotic nature of traumatic experiences and memories.

In trauma treatment, one of the goals is often to help individuals process and integrate traumatic memories, which can be seen as reducing the “entropy” or disorder in their psychological state. The process of therapy can be viewed as a form of “error correction,” similar to how redundancy in a message allows for the correction of errors in transmission.

Furthermore, the Shannon-Weaver model of communication can provide a framework for understanding the therapeutic process itself. The therapist can be seen as a “receiver” decoding the “signals” sent by the patient, while various forms of psychological “noise” (such as defense mechanisms or transference) can interfere with this process.

Jungian analysts might find parallels between Shannon and Weaver’s concept of information and Jung’s idea of psychic energy. Just as information in Shannon’s theory is independent of meaning, Jung’s concept of libido as psychic energy is not tied to specific content but represents a quantitative aspect of the psyche.

Existential therapists, on the other hand, might see connections between the concept of information entropy and the existential notion of uncertainty and anxiety. The idea that increased information (or awareness) can lead to increased uncertainty resonates with existential themes of freedom and responsibility.

Relevance to Anthropology

In anthropology, Shannon and Weaver’s ideas have influenced studies of cultural transmission and the role of communication in shaping social structures. The concept of information entropy has been applied to analyses of cultural diversity and the evolution of languages and traditions.

For example, anthropologists have used information theory to study the complexity and diversity of cultural systems, treating cultural traits as “messages” that are transmitted across generations. The idea of channel capacity has been applied to understanding the limitations and affordances of different modes of cultural transmission, from oral traditions to written texts to digital media.

Moreover, the Shannon-Weaver model of communication has provided a framework for analyzing intercultural communication and the challenges of translating meaning across different cultural contexts.

Relevance to Media Studies

Perhaps the most significant impact of Shannon and Weaver’s work outside of engineering has been in the field of media studies. Their ideas about information, channels, and noise have profoundly influenced how we understand the nature and effects of media technologies.

Media theorist Marshall McLuhan’s famous dictum “the medium is the message,” for instance, invites comparison with Shannon and Weaver’s work. McLuhan’s focus on the properties of media channels rather than the content of messages echoes Shannon’s emphasis on the quantitative aspects of information rather than its meaning.

The concept of noise in communication has been particularly influential in media studies, providing a framework for understanding how various forms of interference – from technical glitches to cultural misunderstandings – can affect the transmission and reception of mediated messages.

Furthermore, Shannon and Weaver’s work on information compression and channel capacity has direct relevance to the development of digital media technologies, from data compression algorithms used in digital audio and video to the design of network protocols for the internet.

VI. Historical and Cultural Context

Shannon and Weaver’s work emerged in a specific historical and cultural context that profoundly shaped their ideas and their reception. Understanding this context is crucial for appreciating the full significance of their contributions.

Post-War Scientific and Technological Development

Shannon and Weaver’s work came at a time of rapid scientific and technological development in the aftermath of World War II. The war had demonstrated the crucial importance of advanced communication and computation technologies, and there was significant government and industry investment in these areas in the post-war period.

Shannon’s work at Bell Labs, in particular, was part of a broader effort to improve and expand telecommunications systems to meet the needs of a growing economy and an increasingly interconnected world. The development of information theory was thus closely tied to practical engineering problems in telecommunications.

The Rise of Cybernetics and Systems Thinking

Shannon and Weaver’s work was part of a broader intellectual movement in the mid-20th century that emphasized systems thinking and the application of mathematical models to complex phenomena. This movement, often associated with the field of cybernetics, sought to develop general principles that could be applied across different domains, from biology to engineering to social sciences.

Norbert Wiener’s work on cybernetics, published in his 1948 book “Cybernetics: Or Control and Communication in the Animal and the Machine,” was particularly influential in this context. Wiener’s ideas about feedback loops and control systems complemented Shannon’s work on information theory, and together they formed the basis for much of modern systems theory and computer science.

The Cold War and Information Control

The development of information theory also took place against the backdrop of the Cold War, which placed a premium on technologies of communication, computation, and cryptography. Shannon’s work on secrecy systems, in particular, was directly relevant to Cold War concerns about secure communication and intelligence gathering.

Moreover, the emphasis on quantification and control in information theory resonated with broader Cold War ideologies that emphasized scientific management and technocratic control. The idea that communication could be reduced to quantifiable units of information aligned with efforts to rationalize and control social and political processes.

The Information Society and Post-Industrial Economy

Shannon and Weaver’s work anticipated and helped shape the emergence of what sociologists would later call the “information society” or the “post-industrial economy.” Their ideas about the nature of information and communication provided a theoretical foundation for understanding the increasing importance of information processing and communication in economic and social life.

As computers and digital communication technologies became more prevalent in the latter half of the 20th century, Shannon and Weaver’s ideas gained increasing relevance beyond their original context in telecommunications engineering. Their work provided a conceptual framework for understanding the digital revolution and its social and cultural implications.

VII. Critique and Legacy

While Shannon and Weaver’s work has been enormously influential, it has also been subject to various critiques and reinterpretations over the years. Understanding these critiques is crucial for appreciating the ongoing relevance and limitations of their ideas.

Critiques of the Information Concept

One of the main critiques of Shannon’s information theory is that it deals with information purely as a quantitative phenomenon, without regard to meaning or semantics. While this abstraction was crucial for developing a mathematical theory of communication, it has limitations when applied to human communication and cognition, where meaning is often central.

Some critics have argued that Shannon’s concept of information is too narrow and fails to capture important aspects of human communication, such as context, intention, and interpretation. This critique has led to the development of alternative theories of information that attempt to incorporate semantic and pragmatic aspects of communication.

Limitations of the Linear Model

The Shannon-Weaver model of communication, with its linear progression from sender to receiver, has been criticized for being overly simplistic and failing to capture the complex, interactive nature of many forms of communication. Critics argue that this model doesn’t adequately account for feedback loops, simultaneous multiple channels of communication, or the active role of the receiver in constructing meaning.

These critiques have led to the development of more complex, non-linear models of communication that emphasize the dynamic, contextual nature of human interaction.

Technological Determinism

Some critics have argued that Shannon and Weaver’s work, with its focus on the technical aspects of communication, can lead to a form of technological determinism – the idea that technology shapes society in predictable, inevitable ways. This critique suggests that their approach may underestimate the social and cultural factors that influence how communication technologies are developed and used.

Legacy and Ongoing Influence

Despite these critiques, Shannon and Weaver’s work continues to have a profound influence across a wide range of fields. In engineering and computer science, information theory remains a fundamental tool for designing and analyzing communication systems, from wireless networks to data compression algorithms.

In the social sciences and humanities, while the limitations of Shannon and Weaver’s approach are widely recognized, their ideas continue to provide valuable insights and metaphors for understanding communication processes. The concept of noise in communication, for instance, has been productively applied to studies of media effects, intercultural communication, and cognitive processing.

Moreover, Shannon and Weaver’s work has had a lasting impact on our understanding of the nature of information itself. The idea that information can be quantified and treated as a fundamental quantity, like matter or energy, has profoundly influenced fields ranging from physics to biology to philosophy.

In recent years, there has been renewed interest in Shannon and Weaver’s ideas in the context of the digital age and the explosion of data and communication technologies. Their work provides a crucial foundation for understanding phenomena such as big data, algorithmic processing, and artificial intelligence.

VIII. Relevance to Emerging Trends in Technology and Media

Shannon and Weaver’s ideas continue to be highly relevant to emerging trends in technology and media. Their work provides a theoretical foundation for understanding many of the key challenges and opportunities of the digital age.

Big Data and Information Overload

The concept of information entropy is particularly relevant in the era of big data. As we grapple with ever-increasing amounts of data, Shannon’s ideas about the relationship between information and uncertainty provide a framework for understanding the challenges of information overload and the value of data compression and summarization techniques.
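The relationship between predictability and compression can be observed with a general-purpose compressor. The sketch below uses Python's standard `zlib` module as a stand-in for the compression techniques mentioned above; the sample data, the fixed seed, and the `compression_ratio` helper are assumptions made for illustration.

```python
import random
import zlib


def compression_ratio(data):
    """Return compressed size divided by original size (smaller means more compressible)."""
    return len(zlib.compress(data, 9)) / len(data)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
redundant = b"ab" * 1000                                        # highly predictable
unpredictable = bytes(rng.getrandbits(8) for _ in range(2000))  # close to maximum entropy

print(f"redundant:     {compression_ratio(redundant):.3f}")
print(f"unpredictable: {compression_ratio(unpredictable):.3f}")
```

The repetitive data shrinks to a small fraction of its size, while the random-looking data barely compresses at all, which is what the entropy of each source would lead one to expect.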

Moreover, the tension between Shannon’s quantitative concept of information and the need for meaningful, actionable insights from data reflects ongoing debates about the limitations and possibilities of data-driven decision making.

Artificial Intelligence and Machine Learning

Shannon’s work on information theory has also been influential in the development of artificial intelligence and machine learning. The idea that learning can be understood as a process of reducing uncertainty (i.e., decreasing entropy) has informed various approaches to machine learning, most directly the information gain criterion used to choose splits in decision tree algorithms.
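Information gain can be computed in a few lines. The following is a minimal sketch, assuming class labels stored in plain lists and a split that partitions them into groups; the function names and the toy labels are hypothetical and not tied to any particular machine learning library.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy in bits of the class distribution in a list of labels."""
    total = len(labels)
    return -sum(
        (count / total) * math.log2(count / total)
        for count in Counter(labels).values()
    )


def information_gain(labels, groups):
    """Entropy before a split minus the size-weighted entropy of the groups after it."""
    total = len(labels)
    remainder = sum(len(group) / total * entropy(group) for group in groups)
    return entropy(labels) - remainder


labels = ["yes", "yes", "no", "no"]
perfect_split = [["yes", "yes"], ["no", "no"]]
useless_split = [["yes", "no"], ["yes", "no"]]

print(information_gain(labels, perfect_split))  # 1.0
print(information_gain(labels, useless_split))  # 0.0
```

A decision tree learner that uses this criterion picks, at each node, the split with the highest gain, meaning the one that removes the most uncertainty about the class label.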

Furthermore, Shannon’s work on game-playing machines and his speculations about artificial intelligence have been prescient in light of recent advances in AI. His paper “Programming a Computer for Playing Chess” (1950) laid some of the groundwork for later developments in game-playing AI.

Quantum Information Theory

In recent years, Shannon’s ideas have been extended into the realm of quantum mechanics, leading to the development of quantum information theory. This field applies information-theoretic concepts to quantum systems, with implications for quantum computing and quantum cryptography.

The development of quantum information theory demonstrates the ongoing fertility of Shannon and Weaver’s ideas, showing how they can be adapted and extended to new domains of inquiry.

Social Media and Network Communication

The Shannon-Weaver model of communication, while originally developed for technical systems, provides a useful starting point for understanding the complexities of social media and network communication. Concepts such as noise, channel capacity, and redundancy can be applied to analyzing phenomena such as information spread on social networks, echo chambers, and viral content.

Moreover, the challenges of misinformation and disinformation in the digital age can be understood partly through the lens of Shannon and Weaver’s ideas about noise and error correction in communication systems.

IX. Implications for Historical Materialism and Media Psychology

Shannon and Weaver’s work, while primarily focused on the technical aspects of communication, has interesting implications when viewed through the lenses of historical materialism and media psychology.

Historical Materialism Perspective

From a historical materialist perspective, Shannon and Weaver’s work can be seen as both a product of and a contributor to the changing material conditions of mid-20th century capitalism. Their development of information theory was deeply tied to the needs of the telecommunications industry and the broader shift towards an information-based economy.

The quantification and commodification of information implicit in Shannon’s theory aligns with the capitalist logic of transforming all aspects of life into measurable, exchangeable units. In this sense, information theory can be seen as part of the broader process of the “real subsumption” of communication under capital.

At the same time, Shannon and Weaver’s work also contributed to changes in the forces of production, helping to lay the groundwork for the digital revolution and the emergence of what some theorists have called “cognitive capitalism” or “informational capitalism.”

The tension between the liberating potential of information technology (e.g., the democratization of knowledge) and its use as a tool of control and exploitation (e.g., surveillance capitalism) can be seen as reflecting the broader contradictions of capitalist development identified by historical materialist analysis.

Media Psychology Perspective

From the perspective of media psychology, Shannon and Weaver’s work provides a foundation for understanding how media technologies shape cognitive processes and psychological experiences.

The concept of channel capacity, for instance, has implications for understanding cognitive load and information processing in media consumption. The increasing complexity and speed of modern media environments can be analyzed in terms of their demands on human information processing capacities.

Moreover, the idea of noise in communication systems can be applied to understanding psychological phenomena such as cognitive dissonance, selective attention, and confirmation bias in media consumption. These psychological “noise” factors can be seen as interfering with the transmission and reception of mediated messages.

The Shannon-Weaver model’s emphasis on the process of encoding and decoding messages also aligns with psychological theories of schema and mental models in media comprehension. The way individuals interpret media messages can be understood as a process of decoding shaped by prior knowledge and expectations.

X. Quotes and Key Passages

To further illustrate the ideas and impact of Shannon and Weaver, here are some key quotes from their work and from scholars discussing their contributions:

“The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point.” – Claude Shannon, “A Mathematical Theory of Communication” (1948)

This quote encapsulates the core problem that Shannon’s information theory sought to address, focusing on the technical aspects of transmitting messages accurately.

“Information is a measure of one’s freedom of choice when one selects a message.” – Warren Weaver, introduction to “The Mathematical Theory of Communication” (1949)

Here, Weaver provides an intuitive explanation of Shannon’s concept of information, linking it to the idea of choice and uncertainty.

“The word communication will be used here in a very broad sense to include all of the procedures by which one mind may affect another.” – Warren Weaver, “The Mathematics of Communication” (1949)

This quote demonstrates Weaver’s efforts to extend Shannon’s technical theory to broader contexts of human communication.

“Shannon’s theory, in a word, is about information, not meaning.” – James Gleick, “The Information: A History, a Theory, a Flood” (2011)

Gleick succinctly captures both the power and the limitations of Shannon’s approach to information.

“Shannon’s work on information theory has been called the ‘Magna Carta of the Information Age’… No other work in this century has had a greater impact on science and engineering.” – John Pierce, “An Introduction to Information Theory: Symbols, Signals and Noise” (1980)

This quote reflects the immense influence and significance attributed to Shannon’s work across various fields of study.

XI. Timeline of Key Events

1894: Warren Weaver born in Reedsburg, Wisconsin

1916: Claude Shannon born in Petoskey, Michigan

1937: Shannon completes his master’s thesis, “A Symbolic Analysis of Relay and Switching Circuits,” applying Boolean algebra to circuit design

1941: Shannon joins Bell Labs

1948: Shannon publishes “A Mathematical Theory of Communication” in the Bell System Technical Journal

1949: Shannon and Weaver’s book “The Mathematical Theory of Communication” is published, including Weaver’s introductory essay

1950: Shannon publishes “Programming a Computer for Playing Chess,” one of the first papers on computer chess

1956: Shannon joins the MIT faculty while remaining affiliated with Bell Labs

1963: Weaver publishes “Lady Luck: The Theory of Probability”

1978: Warren Weaver dies

1978: Shannon retires from MIT

2001: Claude Shannon dies

XII. Conclusion

Claude Shannon and Warren Weaver’s work on information theory represents a watershed moment in our understanding of communication and information. Their ideas have had a profound and lasting impact across a wide range of fields, from engineering and computer science to psychology, linguistics, and media studies.

By providing a mathematical framework for understanding information and communication, Shannon and Weaver helped lay the groundwork for the digital revolution and the emergence of the information society. Their work continues to be relevant today, providing crucial insights into the challenges and opportunities of our increasingly data-driven, networked world.

At the same time, the limitations and critiques of their approach remind us of the complexity of human communication and the importance of considering factors beyond the purely quantitative aspects of information. The ongoing engagement with Shannon and Weaver’s ideas across various disciplines demonstrates both their enduring value and the need for continued development and refinement of our understanding of information and communication.

As we navigate the challenges of the digital age – from information overload and algorithmic bias to the promises and perils of artificial intelligence – Shannon and Weaver’s work continues to provide a crucial foundation for understanding the nature of information and the processes of communication that shape our world.

XIII. Bibliography

Primary Sources

Shannon, C. E. (1948). A Mathematical Theory of Communication. The Bell System Technical Journal, 27, 379–423, 623–656.

Shannon, C. E., & Weaver, W. (1949). The Mathematical Theory of Communication. University of Illinois Press.

Shannon, C. E. (1950). Programming a Computer for Playing Chess. Philosophical Magazine, Ser. 7, Vol. 41, No. 314.

Weaver, W. (1949). Recent Contributions to the Mathematical Theory of Communication. In C. E. Shannon & W. Weaver, The Mathematical Theory of Communication. University of Illinois Press.

Secondary Sources

Aspray, W. (1985). The Scientific Conceptualization of Information: A Survey. Annals of the History of Computing, 7(2), 117-140.

Gleick, J. (2011). The Information: A History, a Theory, a Flood. Pantheon Books.

Pierce, J. R. (1980). An Introduction to Information Theory: Symbols, Signals and Noise. Dover Publications.

Floridi, L. (2010). Information: A Very Short Introduction. Oxford University Press.

Soni, J., & Goodman, R. (2017). A Mind at Play: How Claude Shannon Invented the Information Age. Simon & Schuster.

Gallager, R. G. (2001). Claude E. Shannon: A Retrospective on His Life, Work, and Impact. IEEE Transactions on Information Theory, 47(7), 2681-2695.

Verdú, S. (1998). Fifty Years of Shannon Theory. IEEE Transactions on Information Theory, 44(6), 2057-2078.

Brier, S. (2008). Cybersemiotics: Why Information Is Not Enough! University of Toronto Press.

Day, R. E. (2001). The Modern Invention of Information: Discourse, History, and Power. Southern Illinois University Press.

Kline, R. R. (2004). What Is Information Theory a Theory of? Boundary Work among Information Theorists and Information Scientists in the United States and Britain during the Cold War. In The History and Heritage of Scientific and Technical Information Systems: Proceedings of the 2002 Conference (pp. 15-28). Information Today, Inc.
